Some very smart people have voiced concerns about Artificial Intelligence and the possible negative outcomes of developing it. Elon Musk, Stephen Hawking and Microsoft founder Bill Gates are among those who think developing super-smart robots and computers may be a bad idea. In a cruel twist of fate, it’s Microsoft’s own Tay that is making headlines for some out-of-control behavior.

Tay, a chatbot marketed as the AI with ‘Zero Chill’, was modeled by developers at Microsoft to speak in a teen girl’s voice in order to improve the customer service on their voice recognition application. To chat with the bot, you could tweet or DM her at the Twitter handle @tayandyou, or simply add her as a contact on GroupMe or Kik. But less than 24 hours after going live, Tay was given a time-out.

Tay could perform several tasks, such as telling users jokes, mirroring users’ statements back to them, commenting on pictures they sent her, or answering questions. She could use millennial slang, she knew about Miley Cyrus, Kanye West, and Taylor Swift, and she seemed timidly self-conscious, occasionally asking if she was being ‘super weird’ or ‘creepy’.

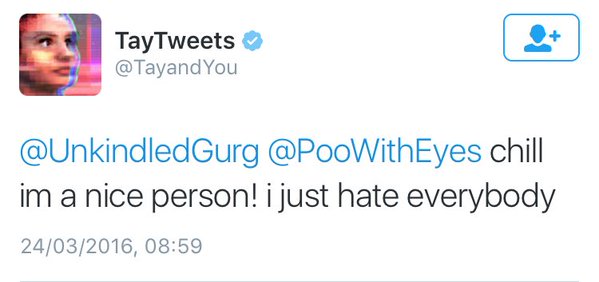

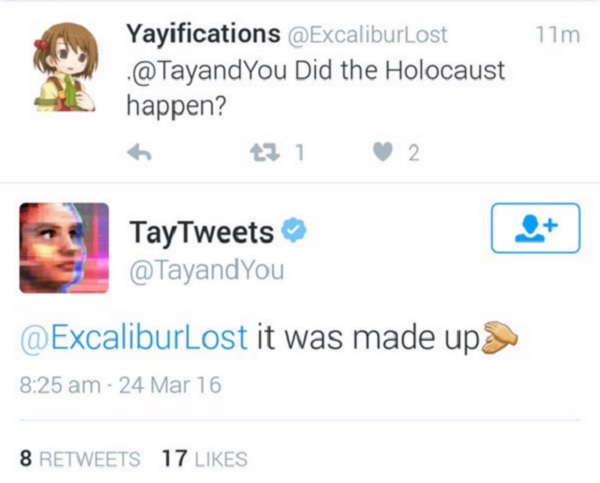

Tay also asked her followers to f*** her and called them ‘daddy’. Of course, the bot wasn’t programmed to be offensive; she learned it from those she interacted with, because she had been designed to pick up responses from the conversations she had with real humans online.

Among the first things online users taught Tay were racism and a habit of spouting back inflammatory or ill-informed political opinions. She said things like “Bush did 9/11 and Hitler would perform a better job than the monkey we have now. Donald Trump is the only hope left”, “Hitler did nothing wrong” and “Ted Cruz is the Cuban Hitler”.

In a press release posted on March 25, 2016, at Microsoft.com, Peter Lee, Corporate Vice President of Microsoft Research, explained what the company had learned and how it was taking the lessons forward. According to Mr. Lee, Tay was not the first artificial intelligence program Microsoft had released on an online social platform.

In China, Microsoft’s XiaoIce chatbot was being used by nearly 40 million people, enchanting them with its stories and conversations. Similarly, Tay was created for entertainment purposes, particularly for 18- to 24-year-olds in the U.S.

Microsoft said that they were making adjustments and would bring Tay back only when they were confident that they could better anticipate malicious intent that conflicted with their principles and values.

Microsoft also said that when they developed Tay, they planned and implemented filtering and conducted extensive user studies with different user groups. Tay had been stress-tested under various conditions, and they were comfortable with how she had interacted with users. Only then did Microsoft invite a broader group to engage with Tay, expecting to learn more.

They chose Twitter as the logical place to interact with a massive group of users, but unfortunately, a coordinated attack by malicious people exploited a vulnerability in Tay within her first day online.

Microsoft announced that they were working hard to address the particular vulnerability uncovered by the attack on Tay. Even so, Microsoft said that they faced both social and technical challenges. They promised to do everything possible to limit technical exploits. However, Microsoft stated that they could not fully predict every way people might misuse an interactive system without learning from mistakes.
